knitr::opts_chunk$set(echo = FALSE, tidy = TRUE, cache = FALSE, message = FALSE, warning = FALSE)

# Very standard packages

library(graphics)

library(ggplot2)

library(tidyverse)

library(knitr)

library(MASS)

# Not so standard

library(gridExtra) # for grid.arrange(), grids of plots in ggplot without facet_grid

library(ggpubr) #for stat_cor function which adds correlation coefficient to ggplot

library(energy) # distance correlation function and t-test in here

library(scatterplot3d) # for easy/boring scatterplots that work in PDF knit

# Good for running

library(ggstatsplot)

# Globally changing the default ggplot theme.

## store default

old.theme <- theme_get()

## Change it to theme_bw(); I don't like the grey background. Look up other themes to find your favorite!

theme_set(theme_bw())

3 Measuring Association

3.1 Getting Started

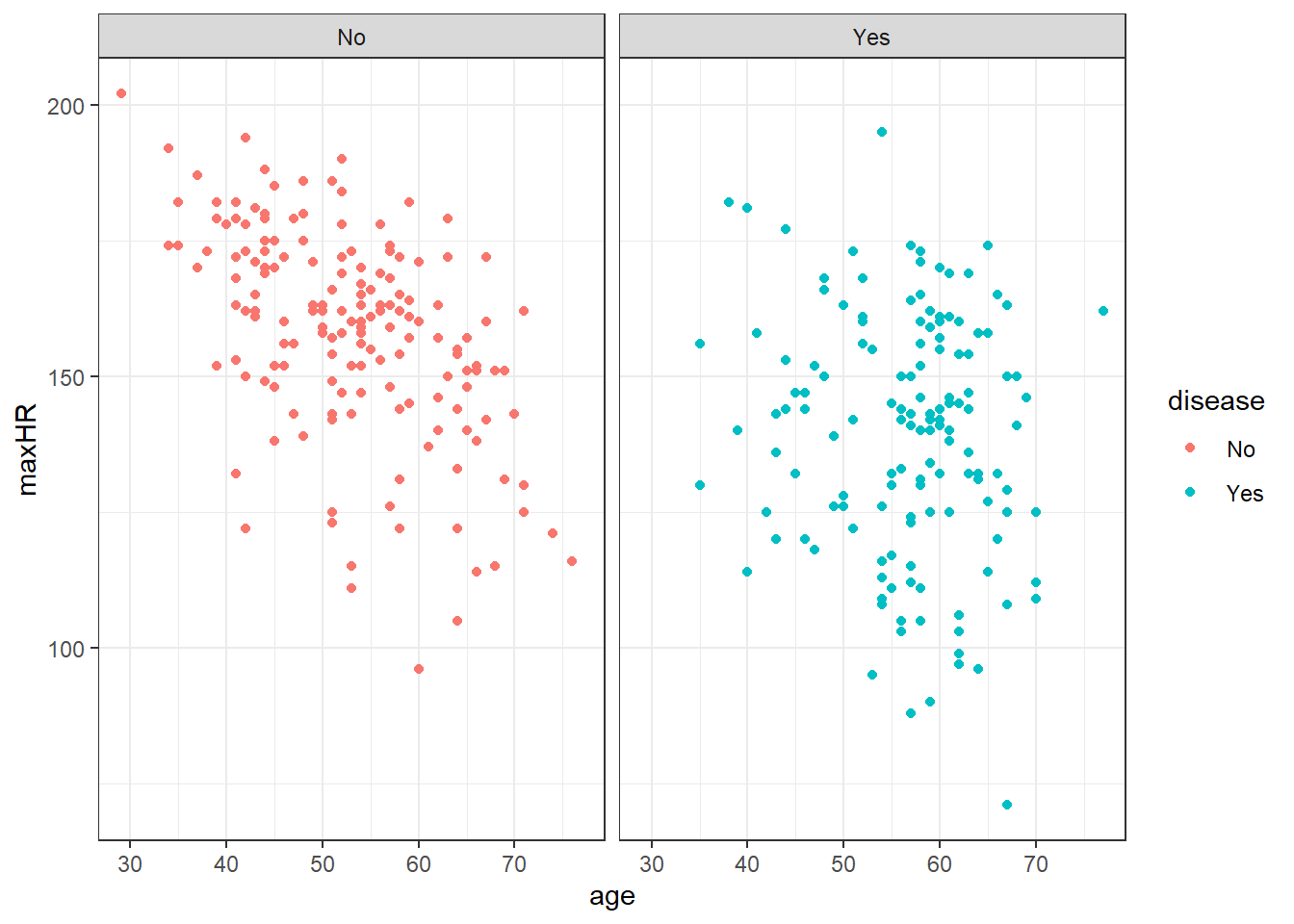

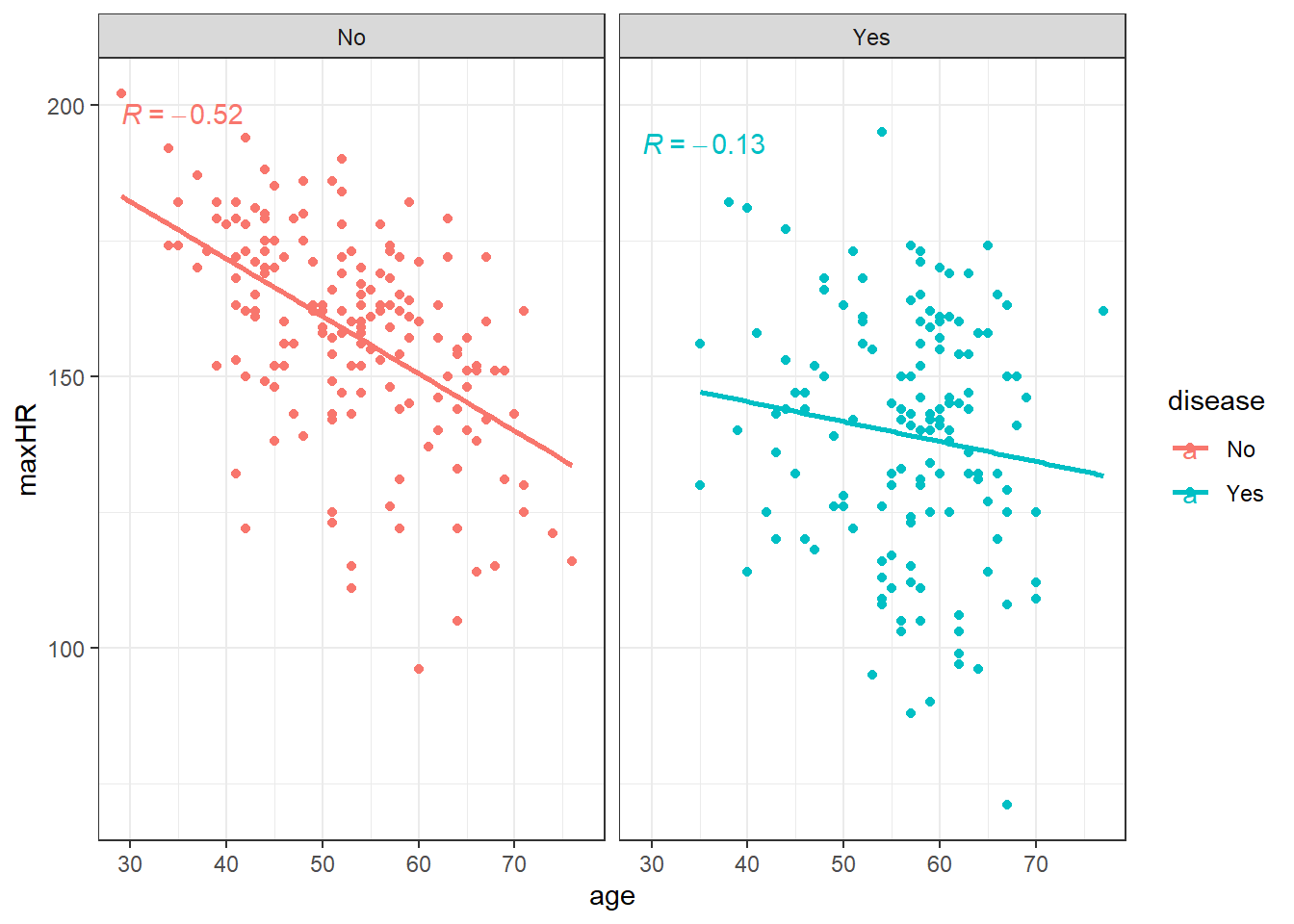

Let’s look back at that heart data.

3.2 Linear Correlation

We refer to the strength of the relationship between two variables as their correlation. There are a few common ways to measure correlation. The most common is the following.

Pearson product-moment correlation:

\[ r = \frac{\sum_{i=1}^n {\left(X_i - \bar{X}\right)\left(Y_i - \bar{Y}\right)} }{ \sqrt{\sum_{i=1}^n {\left(X_i - \bar{X}\right)^2}\sum_{i=1}^n{\left(Y_i - \bar{Y}\right)^2}}} \]
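As a quick check of the formula, here is a minimal sketch that computes \(r\) by hand and compares it with R's built-in cor(). The values of x and y are made up for illustration; they are not the heart data.

```r
# Illustrative data; these values are made up for the example
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 12.2)

# Numerator: sum of cross-products of deviations from the means
num <- sum((x - mean(x)) * (y - mean(y)))

# Denominator: square root of the product of the sums of squared deviations
den <- sqrt(sum((x - mean(x))^2) * sum((y - mean(y))^2))

r_by_hand <- num / den
r_builtin <- cor(x, y)  # Pearson is the default method

print(c(by_hand = r_by_hand, builtin = r_builtin))
```

The two numbers should agree up to floating-point rounding, since cor() uses the same definition.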

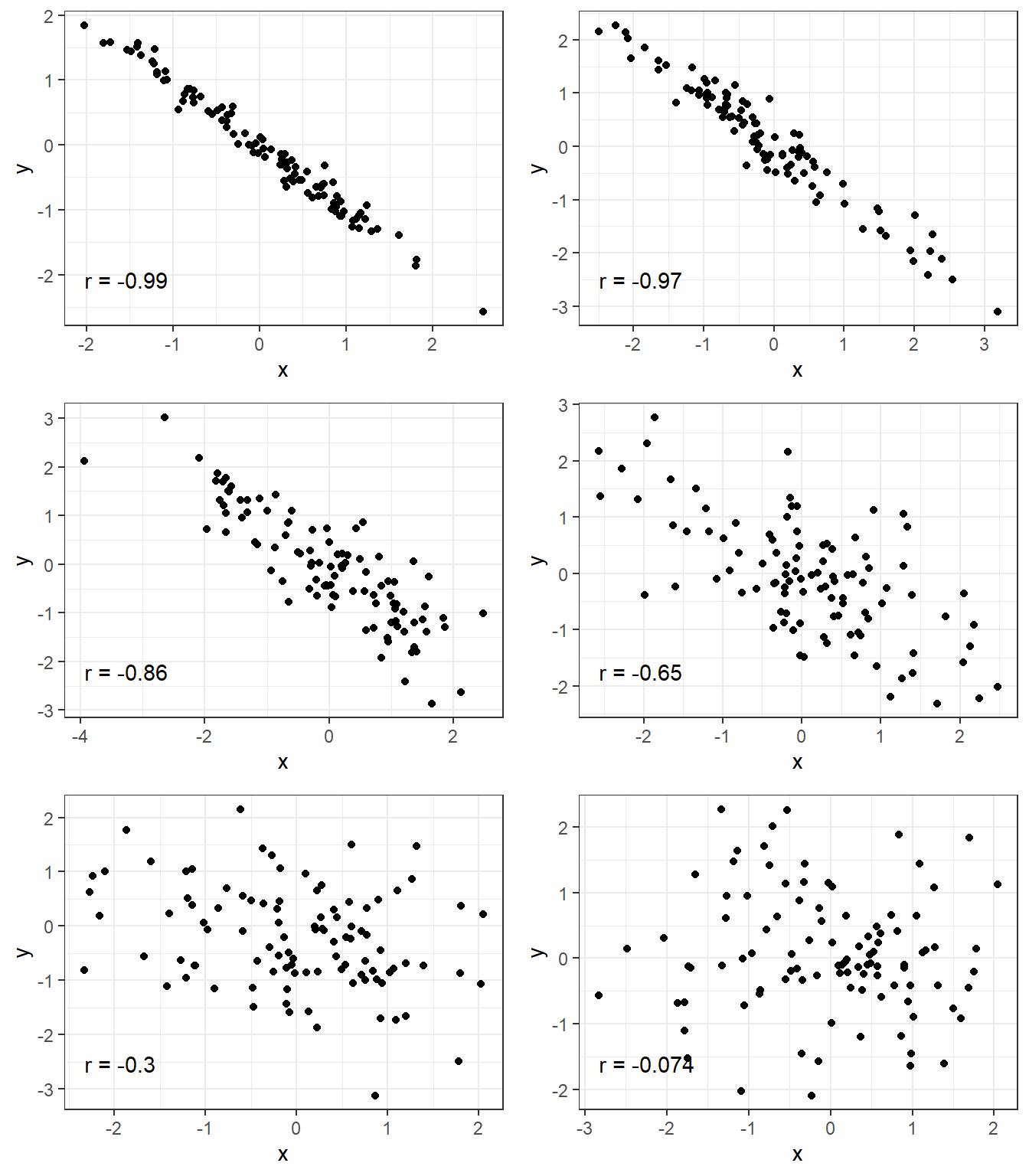

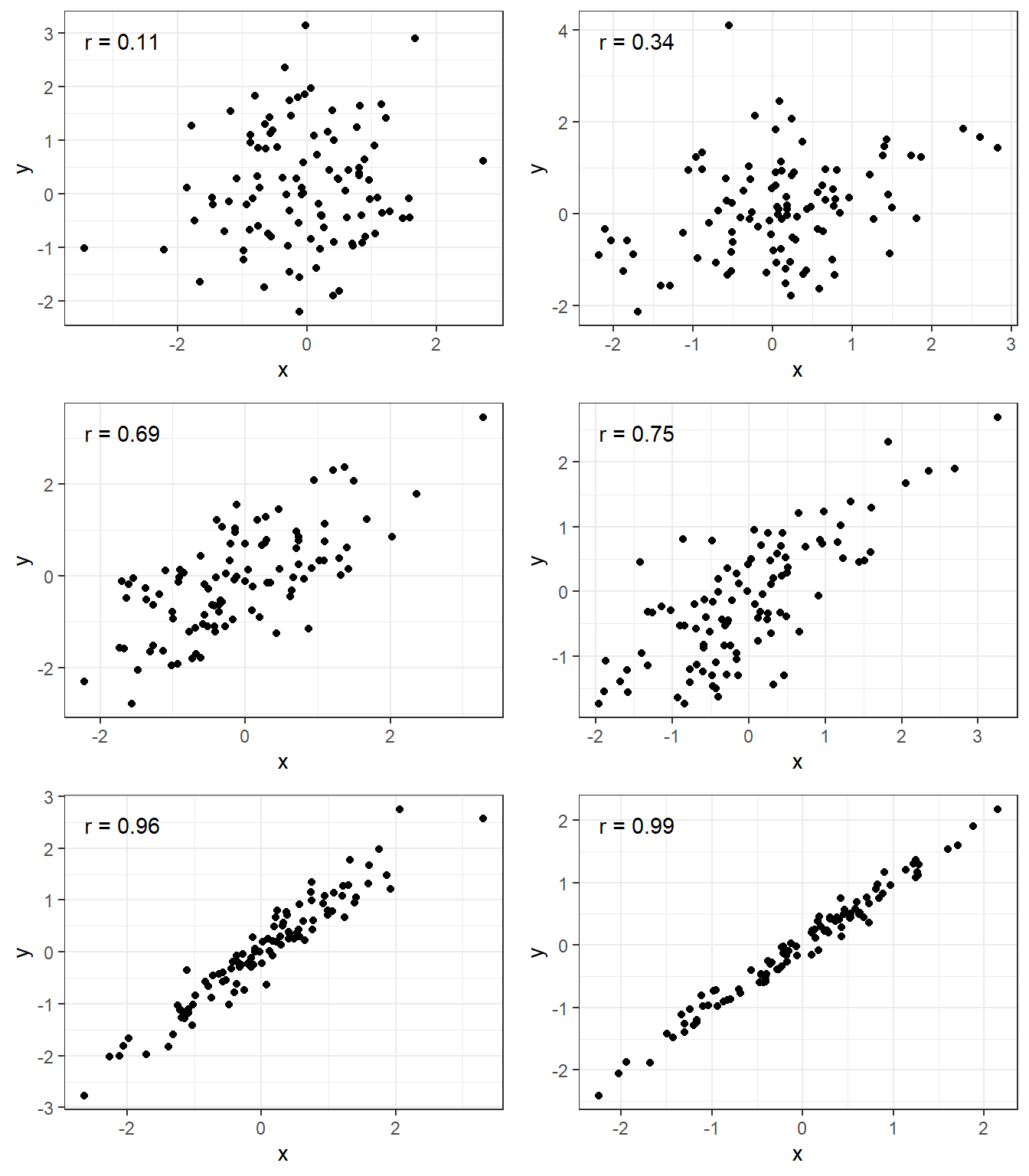

Here are some of the common properties of \(r\):

- It can take on a value from -1 to 1.

- If it is negative, then there is a “negative” relation between \(x\) and \(y\), which means that as \(x\) increases, \(y\) tends to decrease.

- If it is positive, then there is a “positive” relation between \(x\) and \(y\), which means that as \(x\) increases, \(y\) tends to increase.

- The closer to -1 or 1, the closer the \(x\) and \(y\) observations follow a straight line.

- The above calculation is an estimate of the true correlation between two random variables/populations \(X\) and \(Y\).

- This true correlation is denoted by \(\rho\).

- Thus, \(r\) is a point estimate (remember that term?) of \(\rho\) (i.e., it is \(\hat \rho\)).
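To see \(r\) acting as a point estimate of \(\rho\), the sketch below simulates data with a known \(\rho\) and computes \(r\) for each case. The sample size, the seed, the chosen values of \(\rho\), and the helper make_y() are all assumptions made for illustration.

```r
set.seed(1)  # arbitrary seed, only so the sketch is reproducible
n <- 5000
x <- rnorm(n)
noise <- rnorm(n)

# Hypothetical helper: build y so that the population correlation with x is rho.
# y = rho * x + sqrt(1 - rho^2) * noise has variance 1 and correlation rho with x.
make_y <- function(rho) rho * x + sqrt(1 - rho^2) * noise

rhos <- c(-0.9, -0.3, 0, 0.3, 0.9)
r_hat <- sapply(rhos, function(rho) cor(x, make_y(rho)))

print(round(data.frame(rho = rhos, r = r_hat), 3))
```

Each \(r\) should land close to its \(\rho\) but not exactly on it, which is what we expect from an estimate based on a sample.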

3.2.1 Correlation Strength Examples

3.2.2 Linear correlation of the heart data

3.2.3 Correlation does not imply causation

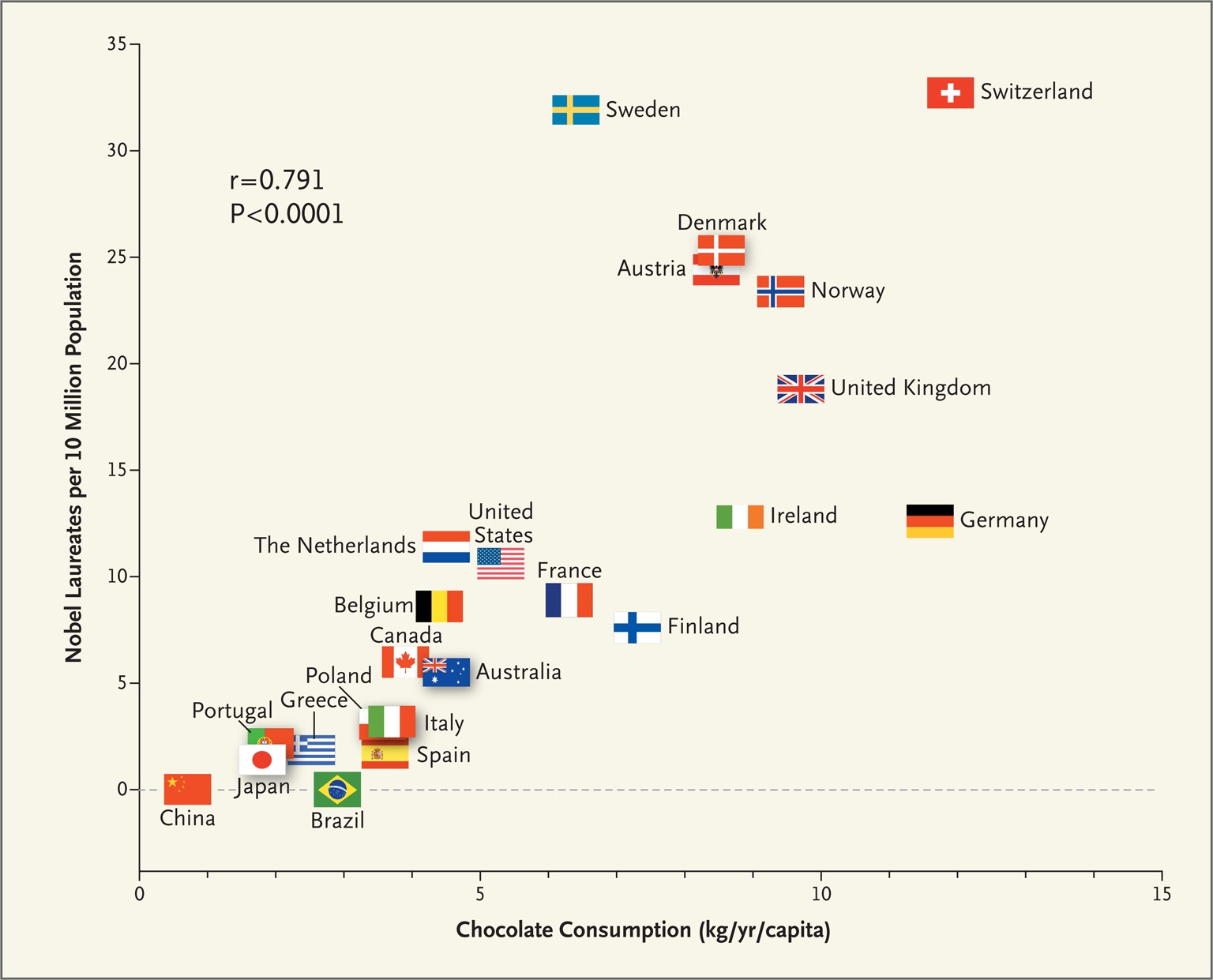

Messerli, F. H. (2012). Chocolate Consumption, Cognitive Function, and Nobel Laureates. New England Journal of Medicine, 367(16), 1562-1564. doi:10.1056/nejmon1211064

The author suggested that this points toward the idea that chocolate increases cognitive function.

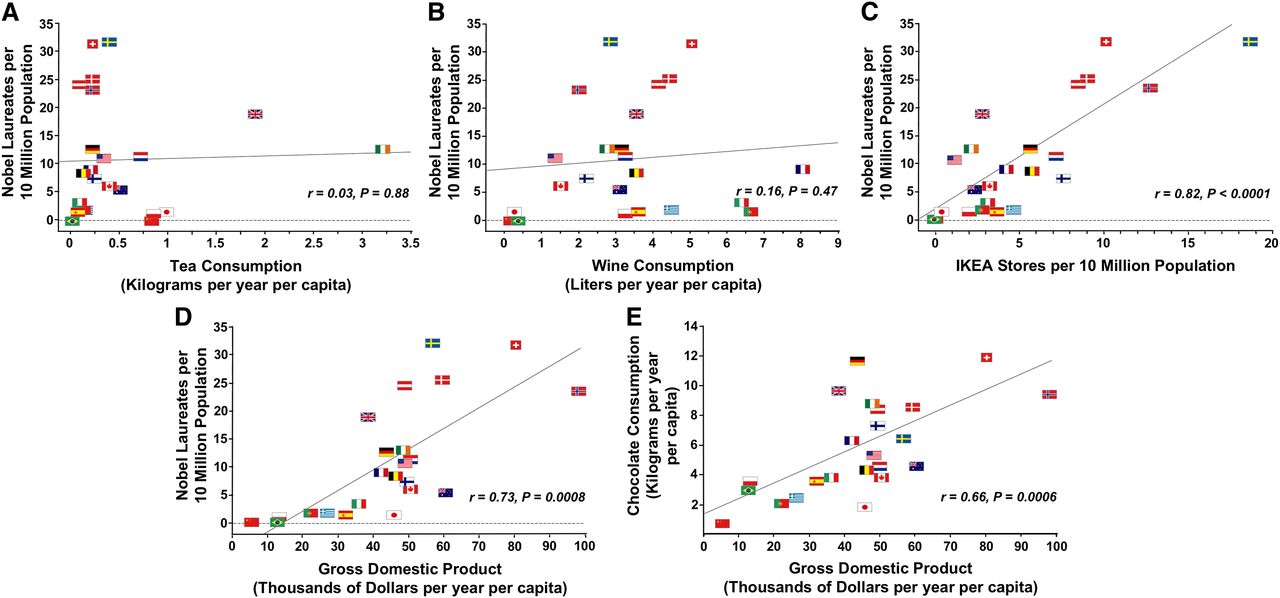

A rebuttal from various authors produced the following graphs. What might they have in common?

3.2.4 cOrReLaTiOn dOeS nOt ImPlY cAuSaTiOn

I feel like this is a fallback phrase for those who just want some sort of easy yes/no answer. “It’s a correlation? Then this result is worthless.” This is incredibly lazy logic.

Do not dismiss correlations out of hand.

Use them to ask questions!

Correlation may not imply causation but it does imply a connection.

Finding that connection, the reason two variables move together without one causing the other, would be quite interesting in a lot of scenarios.

Correlation \(\neq\) causation can be abused.

An article from the Science Based Medicine blog says:

“For example, the tobacco industry abused this fallacy to argue that simply because smoking correlates with lung cancer that does not mean that smoking causes lung cancer. The simple correlation is not enough to arrive at a conclusion of causation, but multiple correlations all triangulating on the conclusion that smoking causes lung cancer, combined with biological plausibility, does.”

It should be noted that other methods can, and should, be used to establish causation.

3.3 Non-linear correlation

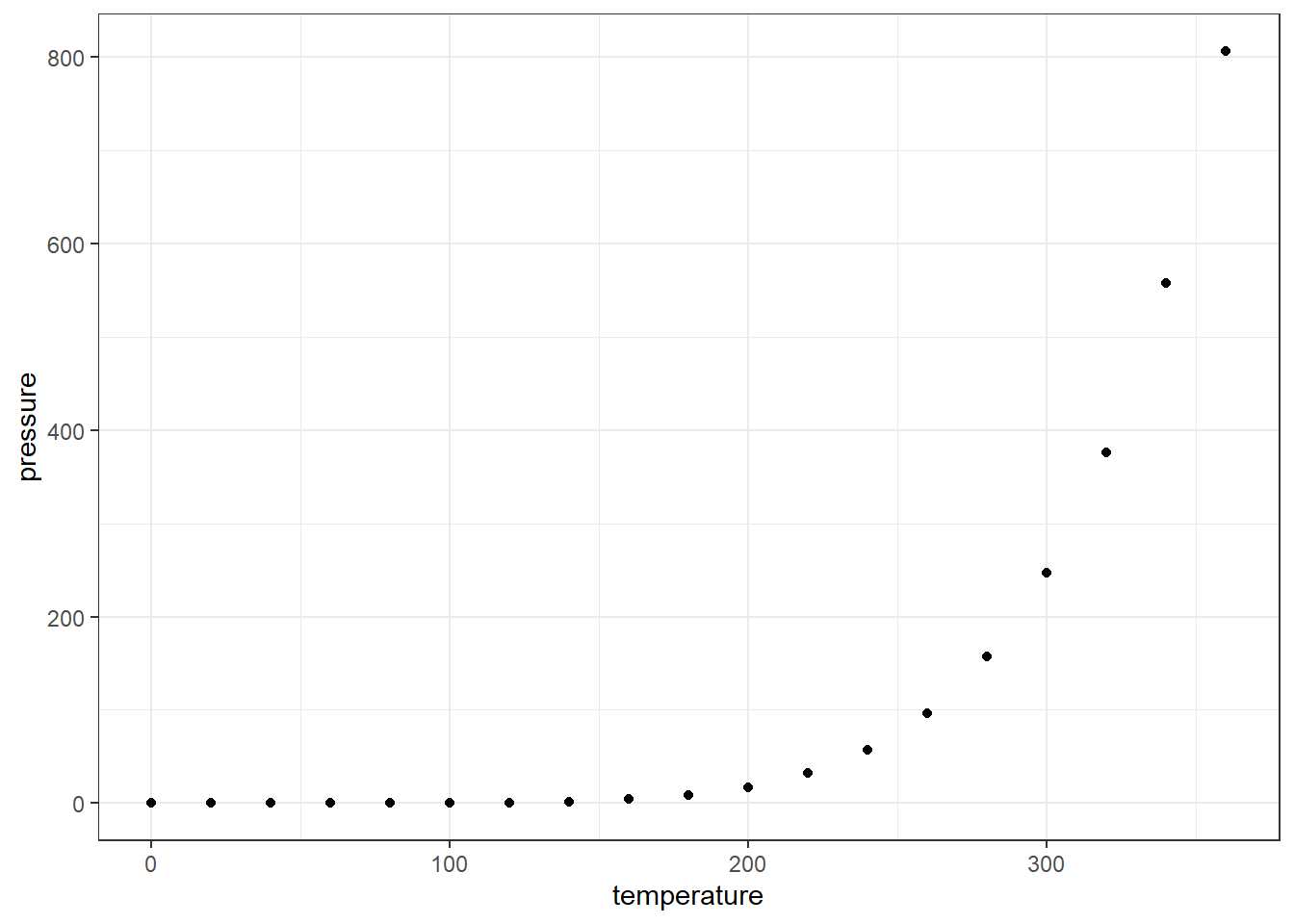

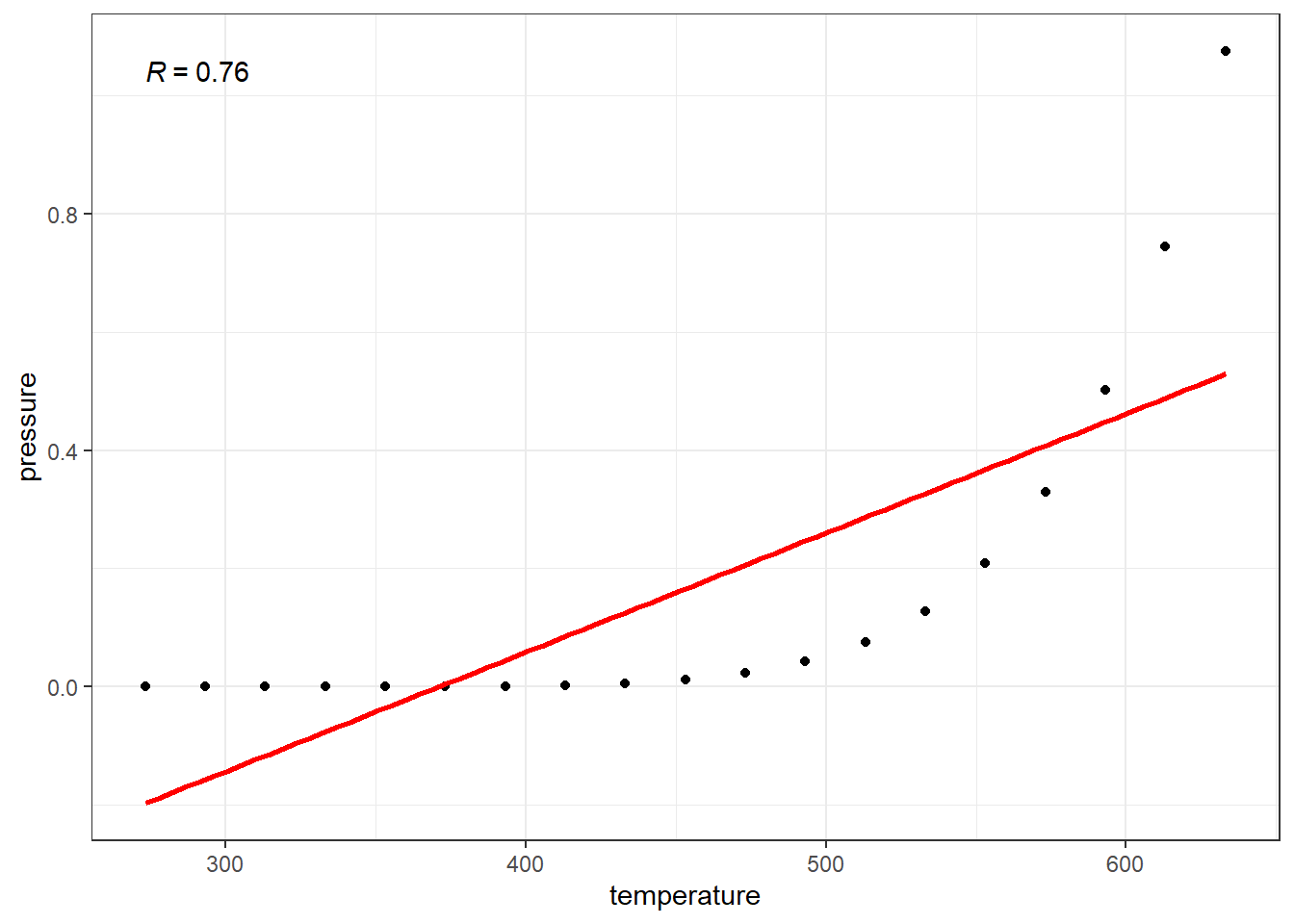

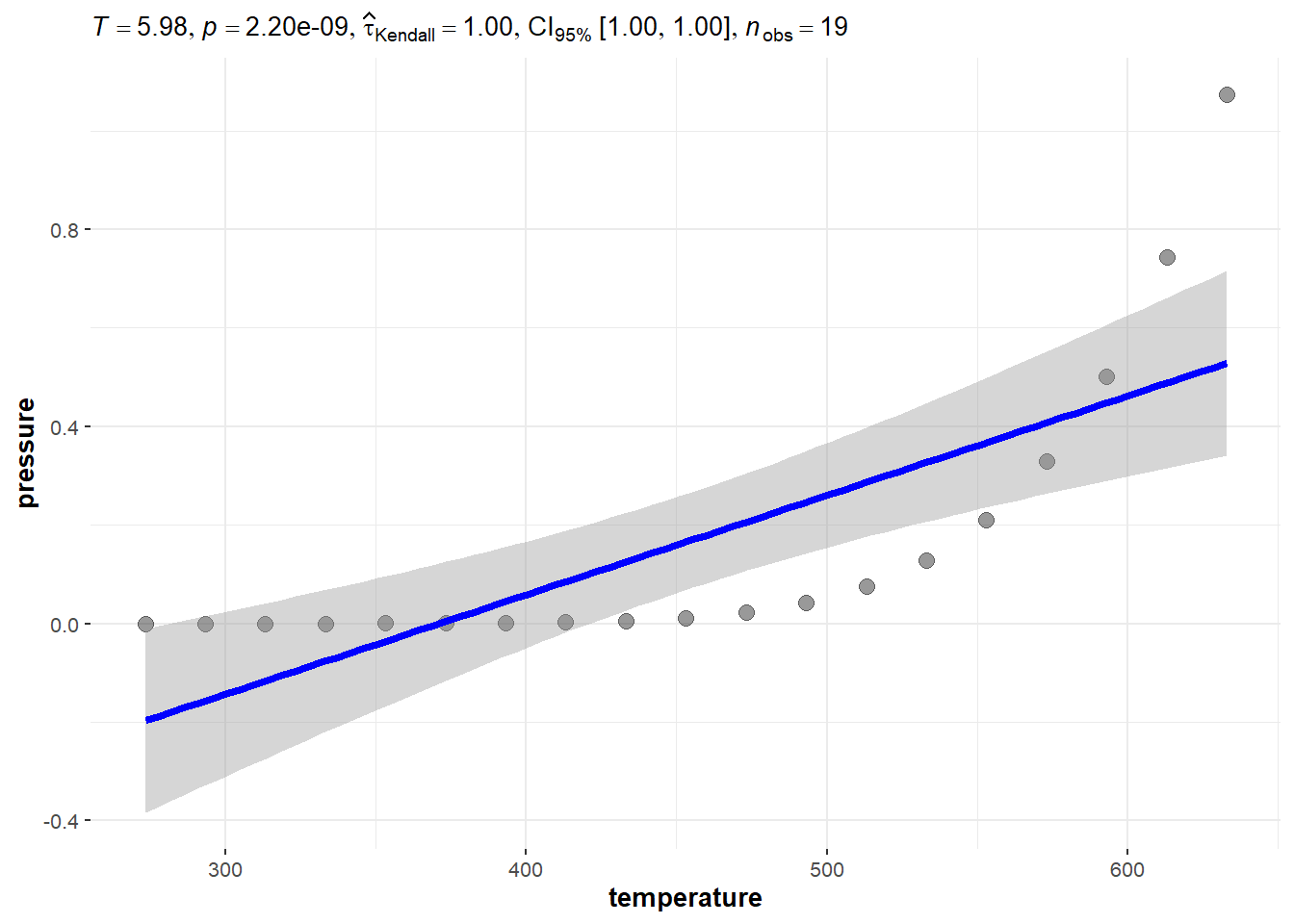

First, let’s look at a purely deterministic system.

- pressure is the vapor pressure of mercury in mm Hg (the pressure inside a closed system).

- temperature is the temperature in \(^\circ C\).

3.3.1 True Pressure Equation

How precise is the relationship? Here is the equation (known as the Antoine equation) for calculating the vapor pressure of an element or molecule.

\[ P = 10^{\left( \displaystyle{A} - \dfrac{B}{C+T} \right)} \]

- \(P\) is vapor pressure.

- \(A\), \(B\), and \(C\) are constants based on the temperature scale, pressure scale, and element/molecule.

- \(T\) is the temperature.

NIST reports the constants for mercury when pressure is measured in bar and temperature is measured in kelvin (K):

- \(A = 4.85767\)

- \(B = 3007.129\)

- \(C = -10.001\)

Notice these are constants. No randomness here, no error here.
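Here is a minimal sketch of the equation in use with the constants quoted above. The temperature grid (0 to 360 \(^\circ C\) in steps of 20) and the helper function names are assumptions for illustration, and the unit conversions (0 \(^\circ C\) = 273.15 K, 1 bar is roughly 750.062 mm Hg) are needed because the constants are for bar and kelvin while the data are in mm Hg and \(^\circ C\).

```r
# Constants for mercury as quoted above (pressure in bar, temperature in K)
A <- 4.85767
B <- 3007.129
C <- -10.001

# Vapor pressure in bar for a temperature in kelvin
vapor_pressure_bar <- function(temp_k) 10^(A - B / (C + temp_k))

# Assumed unit conversions: 0 degrees C = 273.15 K, 1 bar is about 750.062 mm Hg
celsius_to_kelvin <- function(temp_c) temp_c + 273.15
bar_to_mmhg <- function(p_bar) p_bar * 750.062

temp_c <- seq(0, 360, by = 20)  # assumed grid of temperatures in degrees C
p_mmhg <- bar_to_mmhg(vapor_pressure_bar(celsius_to_kelvin(temp_c)))

print(data.frame(temp_c = temp_c, p_mmhg = signif(p_mmhg, 4)))
```

Running this twice gives identical output: the same temperature always yields the same pressure, which is what "no randomness" means here.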

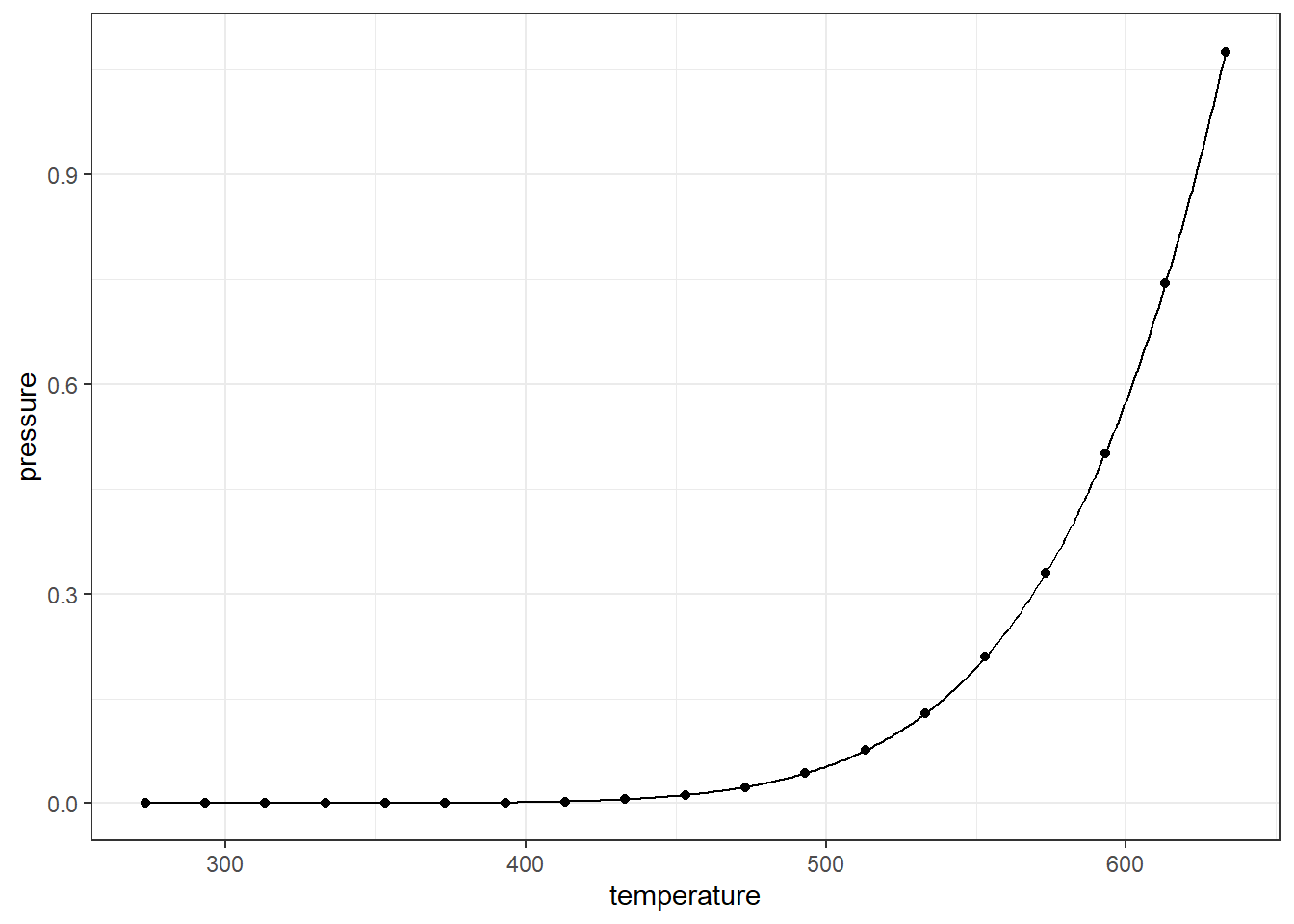

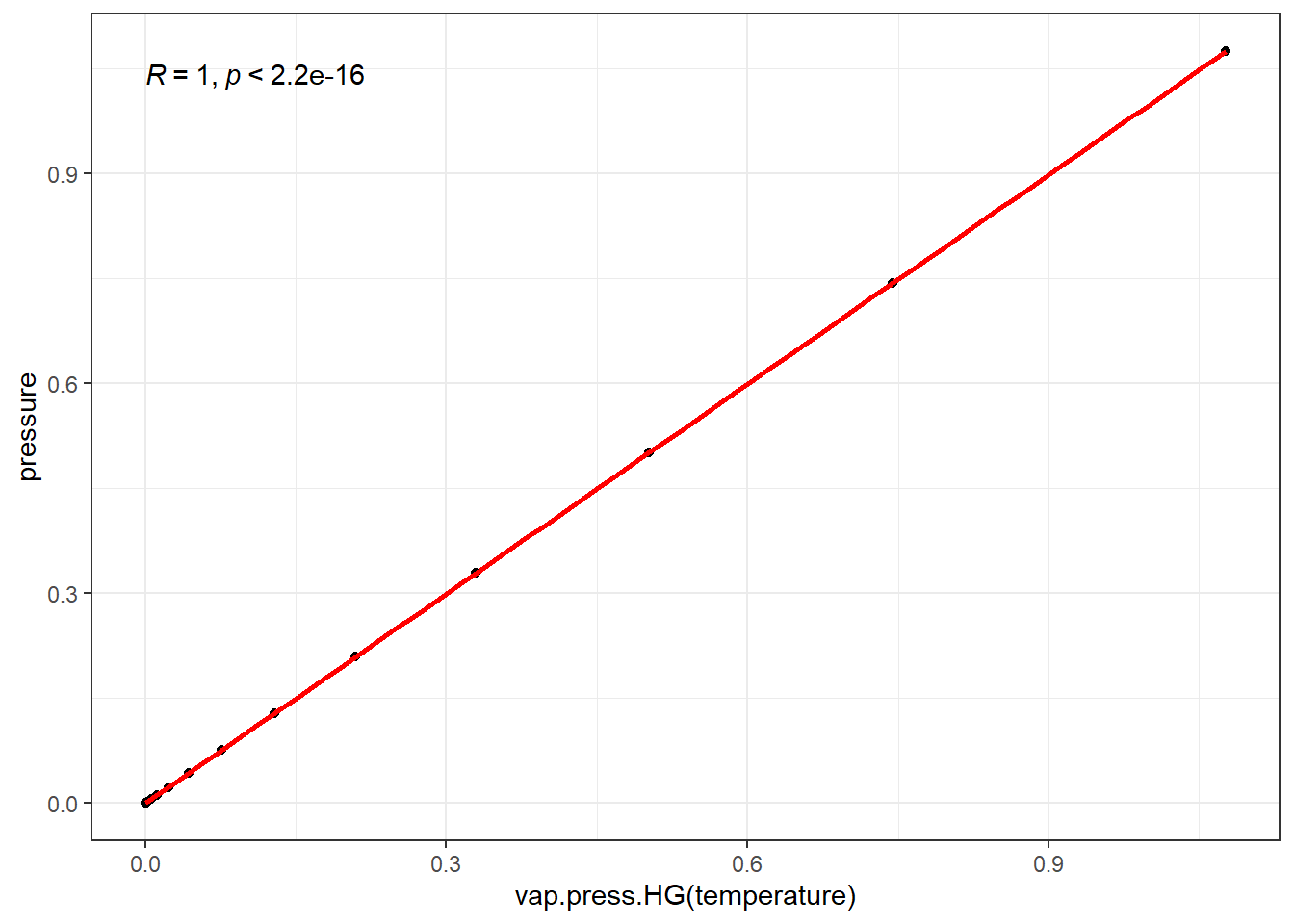

3.3.2 Using the Equation

Next, let’s overlay the equation on our points.

The relationship between vapor pressure and temperature seems to be perfectly accounted for by this equation. If we had a way to measure the strength of the relationship, it would hopefully reflect that perfect relation.

3.3.3 Using Transformations

\[P = 10^{\left( \displaystyle{4.85767} - \dfrac{3007.129}{-10.001+T} \right)}\]
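Taking \(\log_{10}\) of both sides gives \(\log_{10} P = 4.85767 - 3007.129 \cdot \frac{1}{-10.001 + T}\), which is a straight line in the transformed variable \(1/(-10.001 + T)\). The sketch below checks this numerically on pressures generated from the equation itself; the temperature grid is an assumption for illustration.

```r
A <- 4.85767; B <- 3007.129; C <- -10.001   # constants quoted above

temp_k <- seq(273.15, 633.15, by = 20)      # assumed temperature grid, in kelvin
p_bar <- 10^(A - B / (C + temp_k))          # deterministic pressures from the equation

# Pearson correlation before and after transforming both variables
r_raw <- cor(temp_k, p_bar)                            # curved relation
r_transformed <- cor(1 / (C + temp_k), log10(p_bar))   # straight line with slope -B

# A straight-line fit on the transformed scale
fit <- lm(log10(p_bar) ~ I(1 / (C + temp_k)))

print(c(r_raw = r_raw, r_transformed = r_transformed))
print(coef(fit))  # intercept should recover A, slope should recover -B
```

On the raw scale the linear correlation falls well short of 1 even though the relation is exact. After the transformation it is \(-1\) up to rounding, and the fitted line hands back the constants.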

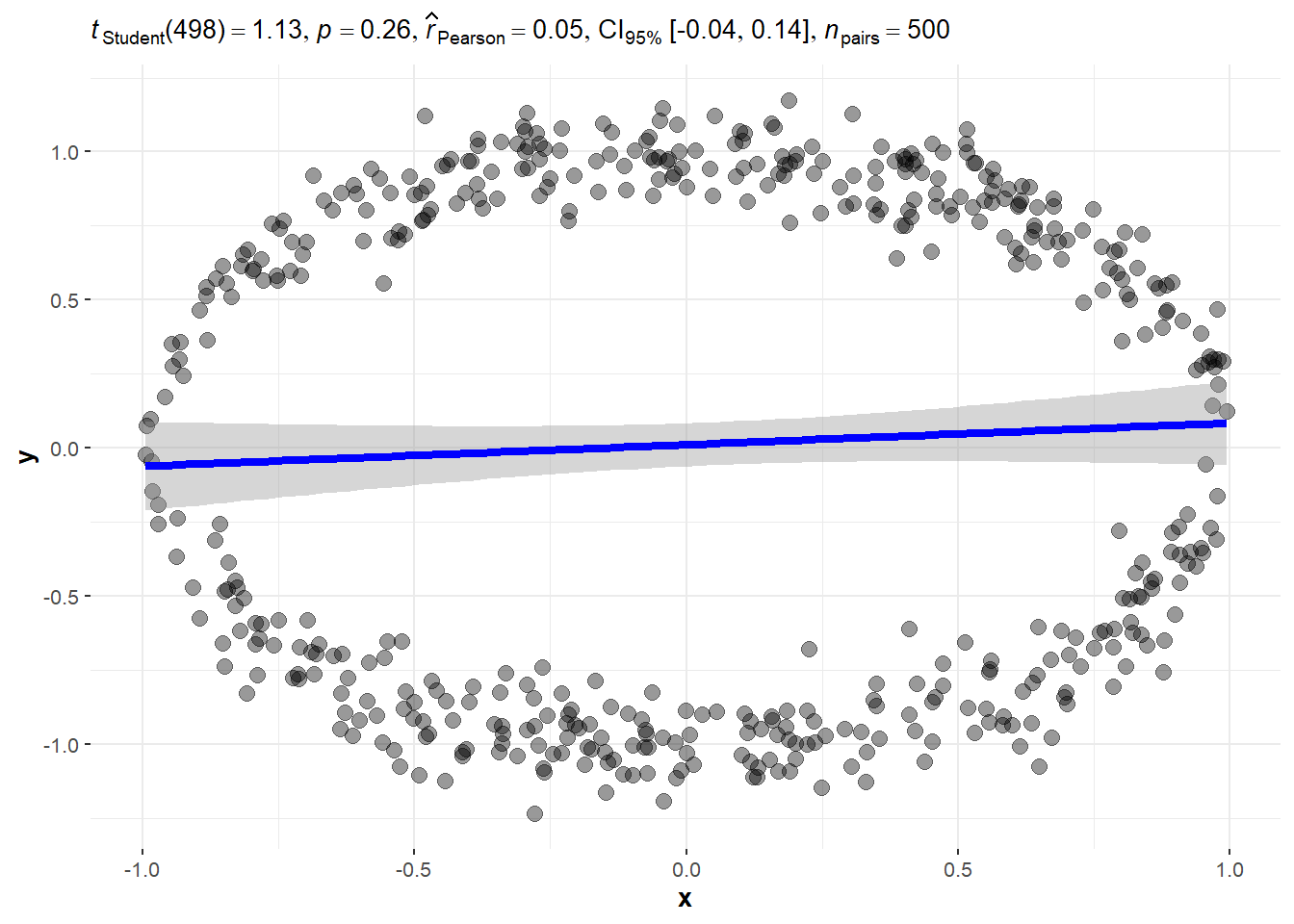

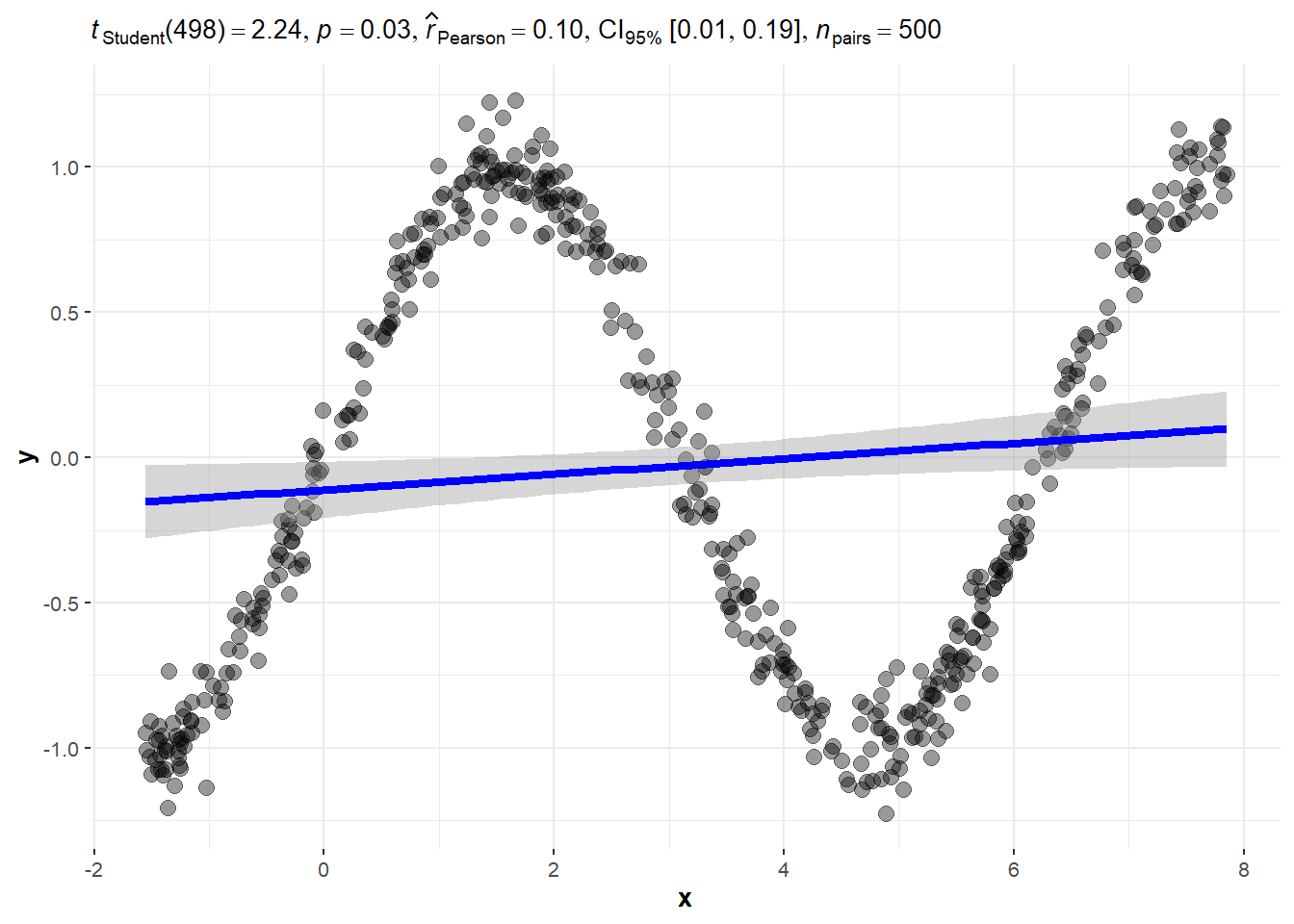

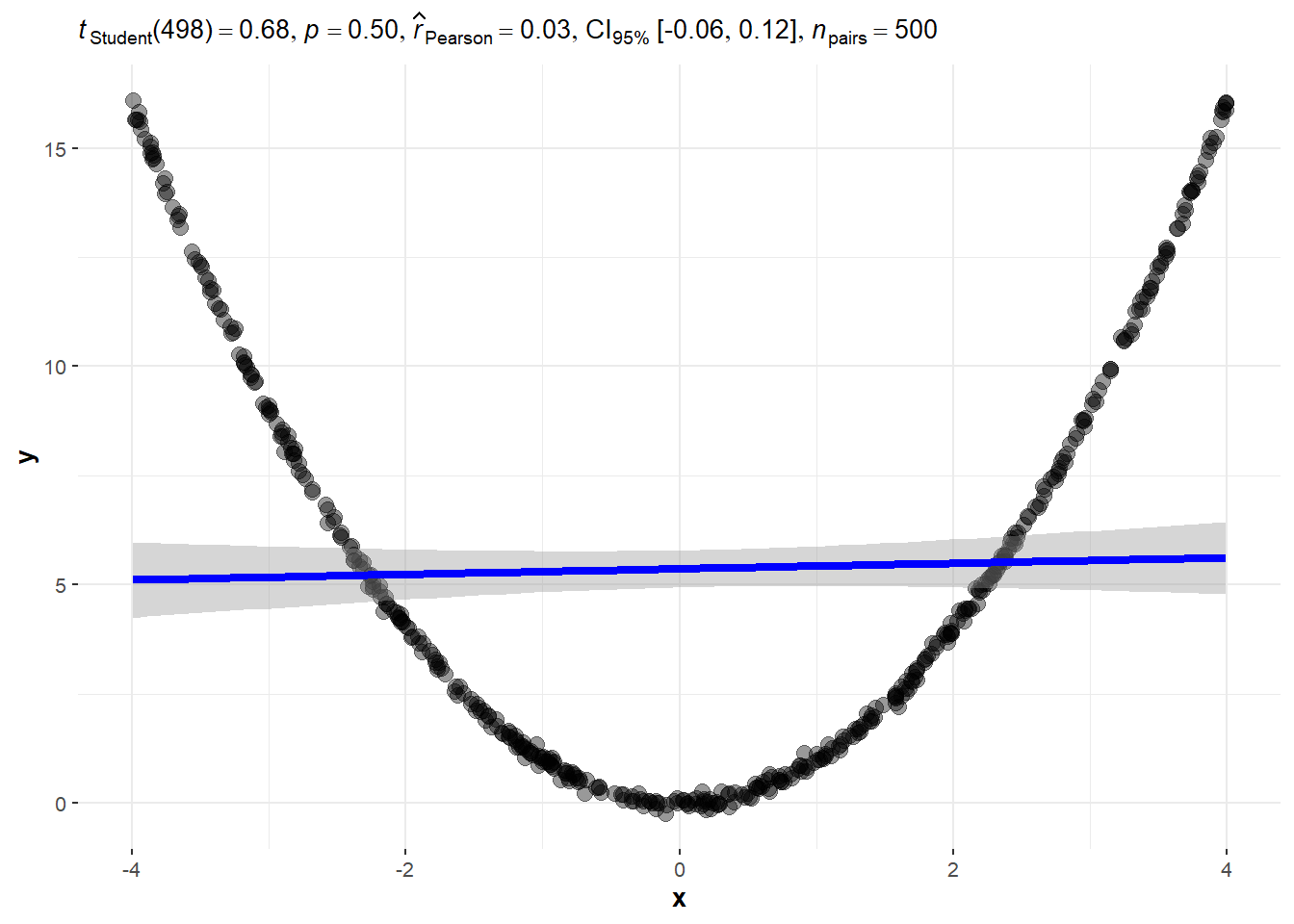

3.4 Zero Linear Relation Examples

- Data falling on a circle. This is a “non-functional” relationship. The mathematical definition of a function stipulates that only one value of \(f(x)\) is the outcome for a value of \(x\). Another way of saying this is that one input value \(x\) should result in one and only one output value \(y\). (Remember the vertical line test?) On a circle, two values of \(y\) are possible for an input value of \(x\), except at the leftmost and rightmost points of the circle.

- Data from a sine wave.

- Data from a quadratic function.
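The three cases can be sketched with noise-free data. The grids below are assumptions chosen so that the symmetry is exact: evenly spaced angles for the circle, one full period measured peak to peak for the sine wave, and \(x\) values symmetric about zero for the quadratic. Over a different window, the sine wave in particular can show a nonzero \(r\).

```r
# Circle: evenly spaced angles around the unit circle
# (the endpoint 2 * pi is dropped so the starting point is not duplicated)
theta <- seq(0, 2 * pi, length.out = 201)[-201]
r_circle <- cor(cos(theta), sin(theta))

# Sine wave: one full period taken peak to peak (an assumed window, chosen so
# the falling and rising stretches balance exactly)
x_sine <- seq(pi / 2, 5 * pi / 2, length.out = 201)
r_sine <- cor(x_sine, sin(x_sine))

# Quadratic: x values symmetric about zero
x_quad <- seq(-3, 3, length.out = 201)
r_quad <- cor(x_quad, x_quad^2)

print(round(c(circle = r_circle, sine = r_sine, quadratic = r_quad), 10))
```

In all three cases \(y\) is tied to \(x\) by an exact rule, yet \(r\) is zero up to rounding. Pearson's \(r\) only measures the linear part of a relation.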

3.4.1 Circle

3.4.2 Sine Wave

3.4.3 Quadratic

3.5 Kendall’s \(\tau\): A Correlation that identifies certain non-linear relations

Concordance: \((x_i-x_j)(y_i-y_j)\) is positive. The pair of points indicates a positive trend.

Discordance: \((x_i-x_j)(y_i-y_j)\) is negative. The pair of points indicates a negative trend.

\[\hat{\tau} = \frac{2}{n(n-1)}\sum_{i<j} \operatorname{sgn}\left(x_i - x_j\right)\operatorname{sgn}\left(y_i - y_j\right)\]

\[ \operatorname{sgn}(x) = \begin{cases}1, \quad x > 0\\ -1, \quad x < 0\\ 0, \quad x = 0\end{cases} \]

Note: Kendall’s \(\tau\) should only be used for “monotonic” relationships. A monotonic function either never decreases or never increases. (Non-decreasing or non-increasing.)

Another Note: There are three commonly used versions of Kendall’s \(\tau\). This one is known as Tau-a. Tau-b should be used for data where there are ties.
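The Tau-a formula above can be sketched directly in R. The data are made up for illustration and contain no ties. To my understanding, cor() with method = "kendall" computes Tau-b, which coincides with Tau-a when there are no ties, so the two results should match here.

```r
# Illustrative data with no ties (values made up for the example)
x <- c(1, 2, 3, 4, 5)
y <- c(2, 1, 4, 3, 5)
n <- length(x)

# Sign of every pairwise difference: entry [i, j] is sgn(x_i - x_j)
sgn_x <- sign(outer(x, x, "-"))
sgn_y <- sign(outer(y, y, "-"))

# Sum over pairs with i < j (the upper triangle), then scale by 2 / (n (n - 1))
upper <- upper.tri(sgn_x)
tau_a <- 2 / (n * (n - 1)) * sum(sgn_x[upper] * sgn_y[upper])

# Built-in version for comparison
tau_builtin <- cor(x, y, method = "kendall")

print(c(tau_a = tau_a, builtin = tau_builtin))  # 8 concordant, 2 discordant pairs: 0.6
```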

3.5.1 Alternative Expression for Kendall’s \(\tau\)

We can express Kendall’s \(\tau\) in a more intuitive way. Remember a pair of observations is \((x_i, y_i)\) and \((x_j, y_j)\).

- Let \(n_c\) be the number of concordant pairs of observations.

- Let \(n_d\) be the number of discordant pairs of observations.

- Let \(N\) be the total number of possible unique pairs of observations.

\[\tau = \dfrac{n_c - n_d}{N}\] or

\[\tau = p_c - p_d\]

where

- \(p_c\) is the proportion of times \(x\) and \(y\) increased together.

- \(p_d\) is the proportion of times \(y\) decreased when \(x\) increased.

Note that \(N = \binom{n}{2} = \frac{n(n-1)}{2}\), where \(n\) is the number of observations.

This may make the interpretation a little more graspable.

- When there are no ties, \(p_c + p_d\) must equal \(1\) (and \(n_c + n_d\) must equal \(N\)). Combining this with the fact that \(\tau = p_c - p_d\) gives:

- \(p_c = \frac{1 + \tau}{2}\)

- \(p_d = \frac{1 -\tau}{2}\)

- When \(\tau\) is positive, it is how much more often we see a concordance, i.e., \(y\) increases when \(x\) increases, than a discordance, where \(y\) decreases when \(x\) increases.

- If \(\tau = 0.5\) then \(p_c = 0.75\) and \(p_d = 0.25\). So 75% of the time \(y\) increased when \(x\) increased, compared to 25% of the time when \(y\) decreased.

- When \(\tau\) is negative, its absolute value is how much more often we see a decrease in \(y\) when \(x\) increases than an increase.

- If \(\tau = -0.7\) then \(p_c = 0.15\) and \(p_d = 0.85\), so 85% of the time we saw a decrease in \(y\), whereas only 15% of the time we saw an increase in \(y\).
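The counting version can be sketched by listing every pair and classifying it. The data are made up for illustration and have no ties, so the identities \(p_c = \frac{1+\tau}{2}\) and \(p_d = \frac{1-\tau}{2}\) should hold.

```r
# Illustrative data with no ties (values made up for the example)
x <- 1:6
y <- c(1, 3, 2, 5, 4, 6)

pairs <- combn(length(x), 2)  # each column holds the indices (i, j) of one pair, i < j
N <- ncol(pairs)              # choose(6, 2) = 15 pairs

# Product (x_i - x_j)(y_i - y_j): positive means concordant, negative means discordant
prod_ij <- (x[pairs[1, ]] - x[pairs[2, ]]) * (y[pairs[1, ]] - y[pairs[2, ]])
n_c <- sum(prod_ij > 0)
n_d <- sum(prod_ij < 0)

tau <- (n_c - n_d) / N
p_c <- n_c / N
p_d <- n_d / N

print(c(n_c = n_c, n_d = n_d, tau = tau, p_c = p_c, p_d = p_d))
```

Here 13 of the 15 pairs are concordant and 2 are discordant, so \(\tau = 11/15 \approx 0.73\): we saw \(y\) increase with \(x\) in about 87% of pairs and decrease in about 13%.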

3.5.2 Kendall’s \(\tau\) with the pressure data

Recall the vapor pressure example.

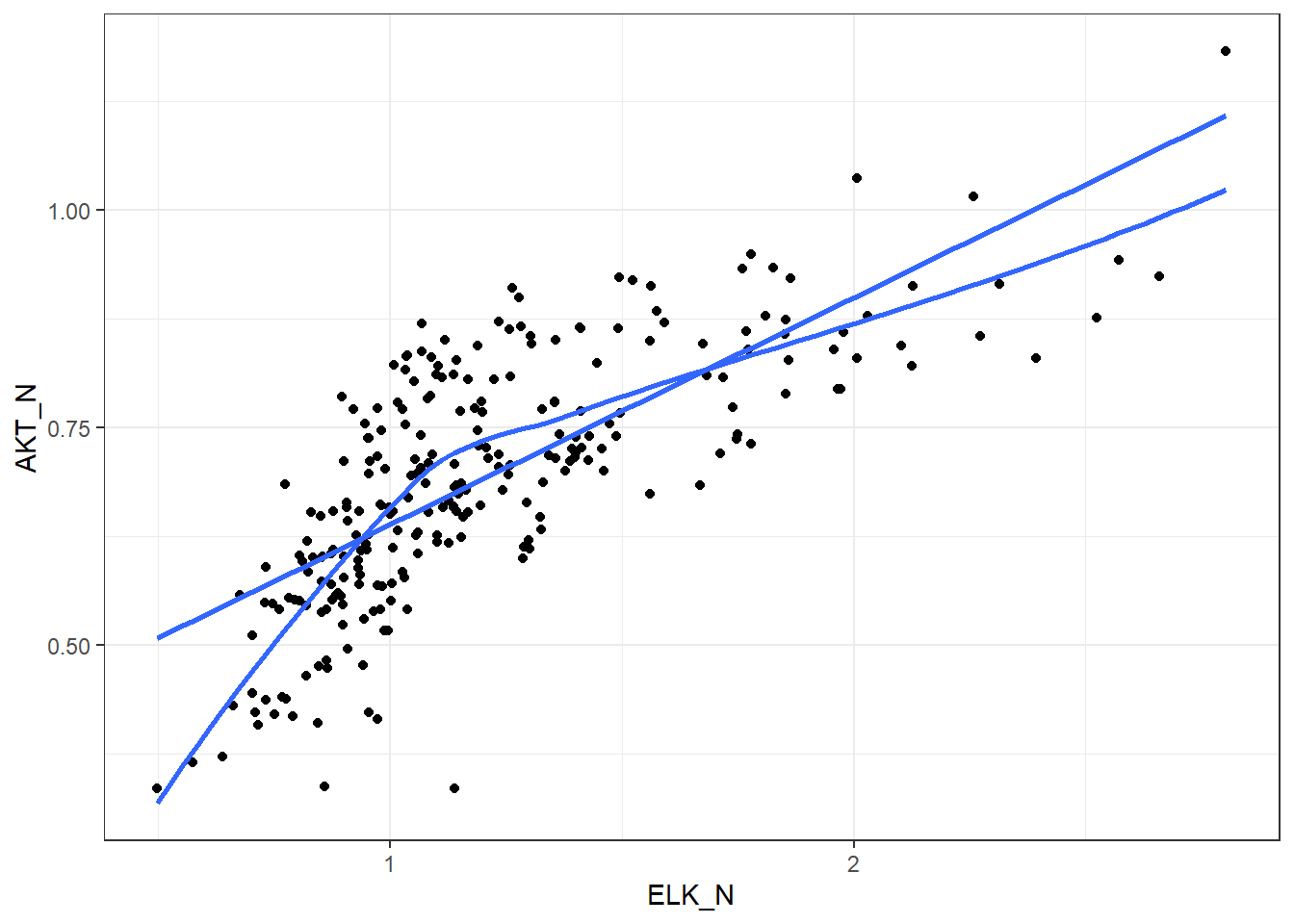
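A minimal sketch of the comparison, assuming the data are R's built-in pressure data frame (columns temperature in \(^\circ C\) and pressure in mm Hg), which matches the description of the variables given earlier:

```r
# Assumes the data are R's built-in `pressure` data frame
# (columns `temperature` in degrees C and `pressure` in mm Hg)
data(pressure)

# Pearson measures linear association; Kendall measures monotonic association
r_pearson <- cor(pressure$temperature, pressure$pressure)
tau_kendall <- cor(pressure$temperature, pressure$pressure, method = "kendall")

print(c(pearson = r_pearson, kendall = tau_kendall))
```

Every time temperature goes up, pressure goes up too, so every pair is concordant and \(\hat{\tau} = 1\). Pearson's \(r\) stays below 1 because the points follow a curve rather than a line.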

3.5.3 Kendall’s \(\tau\) on some mice proteins data

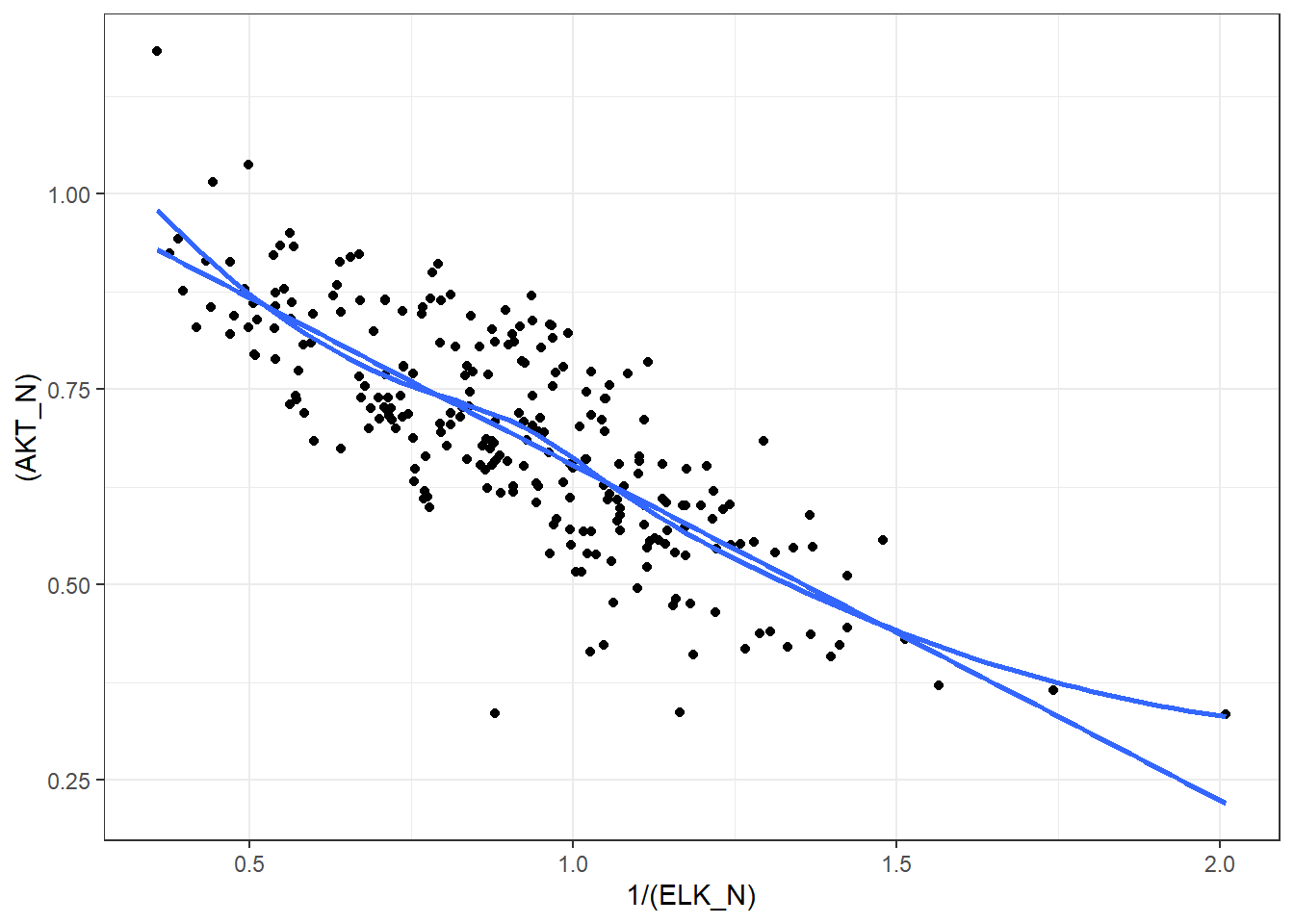

3.5.4 A transformation

3.5.4.1 Monotonic

Definition: In the context of a sequence of numbers, “monotonic” means the sequence is either always increasing or always decreasing. It moves in one direction, without changing direction.

Explanation: Think of a monotonic sequence like walking up or down a staircase. You’re either consistently going upwards (increasing) or consistently going downwards (decreasing). You never switch directions and go up and then down, or down and then up.

Examples:

- Monotonic Increasing: 2, 5, 8, 11, 15 (each number is larger than the one before it)

- Monotonic Decreasing: 10, 7, 4, 1, -2 (each number is smaller than the one before it)

- Not Monotonic: 3, 6, 4, 8 (it increases, then decreases, so it’s not monotonic)
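The three example sequences can be checked with a small helper. The function is_monotonic() is hypothetical, written only for this illustration; it looks at the step between consecutive values and asks whether all steps share the same sign.

```r
# Hypothetical helper: TRUE if a sequence is strictly increasing or strictly decreasing
is_monotonic <- function(s) {
  steps <- diff(s)  # differences between consecutive values
  all(steps > 0) || all(steps < 0)
}

is_monotonic(c(2, 5, 8, 11, 15))   # increasing
is_monotonic(c(10, 7, 4, 1, -2))   # decreasing
is_monotonic(c(3, 6, 4, 8))        # goes up, then down
```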

Monotonic Transformation

Definition: A monotonic transformation is a way of changing a set of numbers into a different set of numbers, but in a way that preserves the order of the original set.

Explanation: Imagine you have a line of people arranged from shortest to tallest. A monotonic transformation would be like giving everyone in line platform shoes. Everyone gets taller, but the order from shortest to tallest stays the same.

Examples:

- Original sequence: 2, 5, 8

- Monotonic transformation (adding 3 to each number): 5, 8, 11

- Monotonic transformation (multiplying each number by 2): 4, 10, 16
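The examples above can be verified in R, along with what order preservation means for correlation. The paired data u and v are made up for illustration. Because Kendall's \(\tau\) depends only on the ordering of the values, a monotonic transformation such as exp() should leave it unchanged, while Pearson's \(r\) generally moves.

```r
x <- c(2, 5, 8)

# The two transformations from the examples above
x_plus_3 <- x + 3    # 5, 8, 11
x_times_2 <- x * 2   # 4, 10, 16

# rank() records each value's position in the ordering; it should be unchanged
same_order <- identical(rank(x), rank(x_plus_3)) && identical(rank(x), rank(x_times_2))
print(same_order)

# Illustrative paired data (made up for the example)
u <- c(1, 2, 3, 4, 5, 6)
v <- c(2, 1, 4, 3, 7, 9)

tau_before <- cor(u, v, method = "kendall")
tau_after <- cor(u, exp(v), method = "kendall")  # exp() is increasing, so order is kept
r_before <- cor(u, v)
r_after <- cor(u, exp(v))

print(c(tau_before = tau_before, tau_after = tau_after))
print(c(r_before = r_before, r_after = r_after))
```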

Why is this important in statistics?

Monotonic transformations are useful in statistics because they can sometimes simplify data analysis without changing the fundamental relationships within the data. For instance, they can be used to:

- Make data easier to work with: Transforming data can sometimes make it easier to visualize or analyze.

- Meet the assumptions of statistical tests: Some statistical tests require data to have certain properties. Monotonic transformations can sometimes help data meet those assumptions.

Key takeaway: Monotonic means “always going in one direction.” A monotonic transformation changes the values in a dataset but keeps the order the same.